GitHub repository (with code): iOS AVAsset

Prerequisites

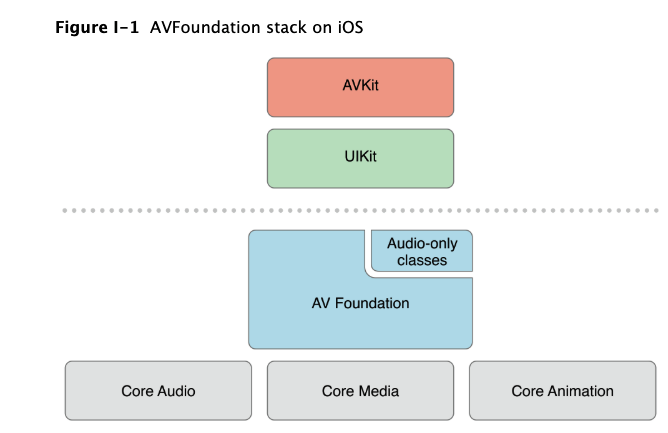

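AVFoundation Overview

AVFoundation is a low-level framework for capturing and playing back time-based media in real time. Apple provides callbacks that deliver every frame of video data. If you only want to play a video without any special processing, you can simply use a higher-level framework such as AVKit. AVFoundation covers the following areas:

- AVAudioPlayer: plays audio files

- AVAudioRecorder: records audio files

- AVAsset: a collection of one or more pieces of media data (audio tracks, video tracks)

- Reading, Writing, and Reencoding Assets: reading, writing out, and re-encoding assets

- Thumbnails: generating thumbnails with AVAssetImageGenerator

- Editing: editing an existing video, for example changing the background color or opacity, or fast-forwarding

- Still and Video Media Capture: using a capture session to capture video data from the camera and audio data from the microphone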

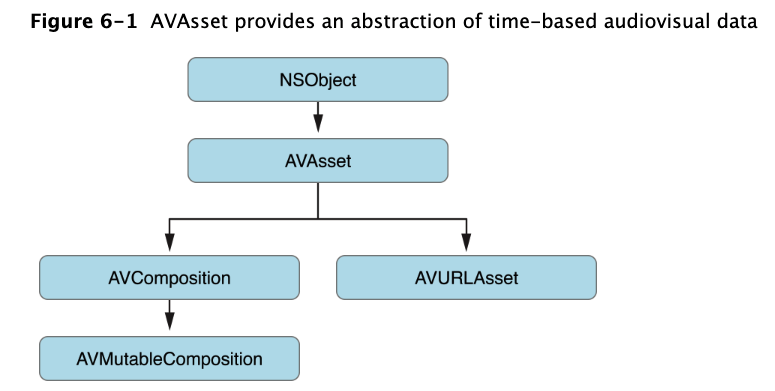

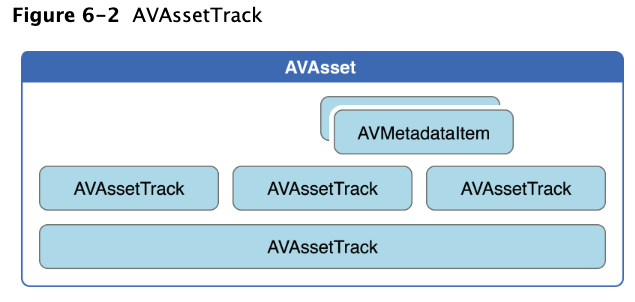

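Representing Assets

AVAsset is the core class of the AVFoundation framework. It provides a time-based representation of audiovisual data, such as a movie file or a video stream. An asset is a collection of tracks, such as audio, video, text, closed captions, and subtitles.

- AVMetadataItem: carries the metadata associated with an asset

- AVAssetTrack: represents a single track, for example an audio track or a video track

AVAsset is an abstraction over time-based audiovisual data, and its structure shapes how much of the framework works. Several of the types AVFoundation uses to represent time and media, such as sample buffers, come from the Core Media framework.

Representing Media

- CMSampleBuffer: represents video frame data

- CMSampleBufferGetPresentationTimeStamp, CMSampleBufferGetDecodeTimeStamp: return the presentation and decode timestamps

- CMFormatDescriptionRef: format information

- CMGetAttachment: retrieves metadata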

CMSampleBufferRef sampleBuffer = <#Get a sample buffer#>;

CFDictionaryRef metadataDictionary =

CMGetAttachment(sampleBuffer, CFSTR("MetadataDictionary"), NULL);

if (metadataDictionary) {

// Do something with the metadata.

}

CMTime

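CMTime is a C struct that represents time as a rational number, with an int64_t numerator (the value) and an int32_t denominator (the timescale). All time-related code in AVFoundation uses this structure, so make sure you understand how it works.

- Using CMTime

CMTime time1 = CMTimeMake(200, 2); // 200 half-seconds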
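As a small illustration of the rational representation, the sketch below builds the same instant with two different timescales and compares them. The function name `DemoCMTimeBasics` is hypothetical; only the Core Media calls are real API.

```objc
#import <Foundation/Foundation.h>
#import <CoreMedia/CoreMedia.h>

// Hypothetical helper: returns YES if two representations of
// "100 seconds" compare as equal.
static BOOL DemoCMTimeBasics(void) {
    CMTime time1 = CMTimeMake(200, 2);   // 200 half-seconds = 100 s
    CMTime time2 = CMTimeMake(400, 4);   // 400 quarter-seconds = 100 s

    // CMTimeCompare returns 0 when both times denote the same instant,
    // even though value and timescale differ.
    BOOL equal = (CMTimeCompare(time1, time2) == 0);

    // Arithmetic goes through helper functions, not C operators.
    CMTime sum = CMTimeAdd(time1, time2);
    NSLog(@"sum = %f seconds", CMTimeGetSeconds(sum));
    return equal;
}
```

Comparing the `value` fields directly would give the wrong answer here, which is why the comparison goes through `CMTimeCompare`.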

- Special CMTime values

- kCMTimeZero

- kCMTimePositiveInfinity

- kCMTimeInvalid

- kCMTimeNegativeInfinity

CMTime myTime = <#Get a CMTime#>;
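The special values should be tested with the Core Media macros rather than compared field by field. A minimal sketch, assuming a hypothetical helper named `IsUsableTime`:

```objc
#import <Foundation/Foundation.h>
#import <CoreMedia/CoreMedia.h>

// Hypothetical helper: YES only for times that are valid, finite and
// not indefinite, i.e. safe to use in arithmetic or to pass to a seek.
static BOOL IsUsableTime(CMTime time) {
    if (CMTIME_IS_INVALID(time)) {
        // Perhaps treat this as an error in real code.
        return NO;
    }
    // CMTIME_IS_NUMERIC also rules out the infinities and indefinite times.
    return CMTIME_IS_NUMERIC(time);
}
```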

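- CMTime as an object

Use CMTimeCopyAsDictionary and CMTimeMakeFromDictionary to convert a CMTime to and from a CFDictionary. You can also use CMTimeCopyDescription to get a string that describes a CMTime.

- CMTimeRange represents a span of time

CMTimeRange is a C struct with a start time and a duration.

Comparison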

- CMTimeRangeContainsTime

- CMTimeRangeEqual

- CMTimeRangeContainsTimeRange

- CMTimeRangeGetUnion

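A time range does not include its end time, so the following expression always evaluates to false:

CMTimeRangeContainsTime(range, CMTimeRangeGetEnd(range))

Special CMTimeRange values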

- kCMTimeRangeInvalid

- kCMTimeRangeZero

CMTimeRange myTimeRange = <#Get a CMTimeRange#>;

- Conversion

Use CMTimeRangeCopyAsDictionary and CMTimeRangeMakeFromDictionary to convert a CMTimeRange to and from a CFDictionary.

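1. Using Assets

Definition: an asset can come from a file or from the user's media library; think of it as a multimedia resource.

When you create an asset object, not all of its data is available immediately. A file containing audio and video can be large, and the system needs time to examine it. Once you have the asset, you can extract still images from it, transcode it to another format, or trim it.

1.1. Creating an Asset Object

An asset is identified by a URL, which can point to a local file or to a network stream.

NSURL *url = <#A URL that identifies an audiovisual asset such as a movie file#>;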

AVURLAsset *anAsset = [[AVURLAsset alloc] initWithURL:url options:nil];

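1.2. Initialization Options

AVURLAsset is initialized with a URL and an options dictionary.

- The key used in the dictionary here is AVURLAssetPreferPreciseDurationAndTimingKey.

- Its value is a Boolean (wrapped in an NSNumber) that indicates whether the asset should be prepared to report a precise duration and provide precise random access by time.

- Getting a precise duration can require significant processing overhead. An approximate duration is cheaper and is sufficient for playback.

- If you only intend to play the asset, pass nil for the options; the key then defaults to NO.

- If you want to add the asset to a composition, you need precise random access, so set the key to YES.

NSURL *url = <#A URL that identifies an audiovisual asset such as a movie file#>;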
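A minimal sketch of passing the option described above when the asset is meant for editing. The helper name `MakePreciseAsset` is an assumption for illustration.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: creates an asset that is prepared to report a
// precise duration, at the cost of extra work when it loads.
static AVURLAsset *MakePreciseAsset(NSURL *url) {
    NSDictionary *options = @{ AVURLAssetPreferPreciseDurationAndTimingKey : @YES };
    return [[AVURLAsset alloc] initWithURL:url options:options];
}
```

For plain playback the same initializer can be called with `options:nil`, which leaves the cheaper approximate timing in place.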

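1.3. Accessing the User's Media Library

You can also get video assets from the user's media libraries:

- iPod library: MPMediaQuery

- iPhone photo library: ALAssetsLibrary, which has been deprecated since iOS 9 in favor of the Photos framework

ALAssetsLibrary *library = [[ALAssetsLibrary alloc] init];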

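1.4. Preparing an Asset for Use

Initializing an asset does not mean that the information you want to retrieve is immediately available. Computing it can take time, so request the values asynchronously and receive the result in a block.

Use the AVAsynchronousKeyValueLoading protocol.

- statusOfValueForKey:error: tests whether the value of a property has been loaded

NSURL *url = <#A URL that identifies an audiovisual asset such as a movie file#>;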
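The asynchronous loading pattern can be sketched as follows. `LoadTracks` and its completion signature are hypothetical; `loadValuesAsynchronouslyForKeys:completionHandler:` and `statusOfValueForKey:error:` are the AVAsynchronousKeyValueLoading methods.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: loads the "tracks" key and reports whether it
// became available. The completion block runs on an arbitrary queue,
// so hop to the main queue before touching any UI.
static void LoadTracks(AVAsset *asset,
                       void (^completion)(BOOL loaded, NSError *error)) {
    NSArray<NSString *> *keys = @[@"tracks"];
    [asset loadValuesAsynchronouslyForKeys:keys completionHandler:^{
        NSError *error = nil;
        AVKeyValueStatus status = [asset statusOfValueForKey:@"tracks"
                                                       error:&error];
        BOOL loaded = (status == AVKeyValueStatusLoaded);
        // Failed and Cancelled both count as "not loaded" in this sketch.
        completion(loaded, loaded ? nil : error);
    }];
}
```

Only after the status is `AVKeyValueStatusLoaded` is it safe to read `asset.tracks` without blocking the calling thread.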

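1.5. Getting Still Images From a Video

To get still images such as thumbnails from an asset that you play back, use an AVAssetImageGenerator object. You can use tracksWithMediaCharacteristic: to test whether the asset has any tracks with visual content.

AVAsset *anAsset = <#Get an asset#>;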

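1.6. Generating a Single Image

Use copyCGImageAtTime:actualTime:error: to generate an image at a specific time. AVFoundation may not be able to produce an image at exactly the requested time; the actualTime argument reports the time at which the image was actually generated.

AVAsset *myAsset = <#An asset#>;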
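Putting the two previous steps together, a thumbnail near the midpoint of an asset might be produced as sketched below. The helper name `CopyMidpointThumbnail` is hypothetical, and the call blocks the calling thread while the image is generated.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: returns a +1 retained CGImage near the midpoint of
// the asset, or NULL. The caller must CGImageRelease() a non-NULL result.
static CGImageRef CopyMidpointThumbnail(AVAsset *asset, CMTime *outActualTime) {
    // Skip assets that have nothing visual to render.
    if ([asset tracksWithMediaCharacteristic:AVMediaCharacteristicVisual].count == 0) {
        return NULL;
    }
    AVAssetImageGenerator *generator =
        [AVAssetImageGenerator assetImageGeneratorWithAsset:asset];

    Float64 durationSeconds = CMTimeGetSeconds(asset.duration);
    CMTime midpoint = CMTimeMakeWithSeconds(durationSeconds / 2.0, 600);

    NSError *error = nil;
    CMTime actualTime = kCMTimeInvalid;
    CGImageRef image = [generator copyCGImageAtTime:midpoint
                                         actualTime:&actualTime
                                              error:&error];
    if (image == NULL) {
        NSLog(@"Thumbnail generation failed: %@", error.localizedDescription);
        return NULL;
    }
    if (outActualTime != NULL) {
        *outActualTime = actualTime;   // may differ from the requested time
    }
    return image;
}
```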

1.7. Generating a Sequence of Images

To generate a series of images, call generateCGImagesAsynchronouslyForTimes:completionHandler: with an array of the times at which you want images.

AVAsset *myAsset = <#An asset#>;

// Assume: @property (strong) AVAssetImageGenerator *imageGenerator;

self.imageGenerator = [AVAssetImageGenerator assetImageGeneratorWithAsset:myAsset];

Float64 durationSeconds = CMTimeGetSeconds([myAsset duration]);

CMTime firstThird = CMTimeMakeWithSeconds(durationSeconds/3.0, 600);

CMTime secondThird = CMTimeMakeWithSeconds(durationSeconds*2.0/3.0, 600);

CMTime end = CMTimeMakeWithSeconds(durationSeconds, 600);

NSArray *times = @[[NSValue valueWithCMTime:kCMTimeZero],

[NSValue valueWithCMTime:firstThird], [NSValue valueWithCMTime:secondThird],

[NSValue valueWithCMTime:end]];

[self.imageGenerator generateCGImagesAsynchronouslyForTimes:times

completionHandler:^(CMTime requestedTime, CGImageRef image, CMTime actualTime,

AVAssetImageGeneratorResult result, NSError *error) {

NSString *requestedTimeString = (NSString *)

CFBridgingRelease(CMTimeCopyDescription(NULL, requestedTime));

NSString *actualTimeString = (NSString *)

CFBridgingRelease(CMTimeCopyDescription(NULL, actualTime));

NSLog(@"Requested: %@; actual %@", requestedTimeString, actualTimeString);

if (result == AVAssetImageGeneratorSucceeded) {

// Do something interesting with the image.

}

if (result == AVAssetImageGeneratorFailed) {

NSLog(@"Failed with error: %@", [error localizedDescription]);

}

if (result == AVAssetImageGeneratorCancelled) {

NSLog(@"Canceled");

}

}];

You can also call cancelAllCGImageGeneration to cancel image generation that is still in progress.

1.8. Trimming and Transcoding a Video

Use an AVAssetExportSession object to transcode a video to another format or to trim it.

AVAsset *anAsset = <#Get an asset#>;

NSArray *compatiblePresets = [AVAssetExportSession exportPresetsCompatibleWithAsset:anAsset];

if ([compatiblePresets containsObject:AVAssetExportPresetLowQuality]) {

AVAssetExportSession *exportSession = [[AVAssetExportSession alloc]

initWithAsset:anAsset presetName:AVAssetExportPresetLowQuality];

// Configure the session here, while exportSession is still in scope.

exportSession.outputURL = <#A file URL#>;

exportSession.outputFileType = AVFileTypeQuickTimeMovie;

CMTime start = CMTimeMakeWithSeconds(1.0, 600);

CMTime duration = CMTimeMakeWithSeconds(3.0, 600);

CMTimeRange range = CMTimeRangeMake(start, duration);

exportSession.timeRange = range;

}

Calling exportAsynchronouslyWithCompletionHandler: creates the new file.

[exportSession exportAsynchronouslyWithCompletionHandler:^{

switch ([exportSession status]) {

case AVAssetExportSessionStatusFailed:

NSLog(@"Export failed: %@", [[exportSession error] localizedDescription]);

break;

case AVAssetExportSessionStatusCancelled:

NSLog(@"Export canceled");

break;

default:

break;

}

}];

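Call cancelExport to cancel an export.

An export can fail for reasons such as the following:

- An incoming phone call arrives

- Your app is in the background and another app starts playing audio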

2. Playback

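Use an AVPlayer object to play an asset.

2.1 Playing Assets

- Use AVPlayer to play a single asset

- Use AVQueuePlayer to play a number of items in sequence

2.2 Handling Different Types of Asset

- File-based assets

- Create an AVURLAsset

- Create an AVPlayerItem from the asset

- Associate the AVPlayerItem with an AVPlayer

- Use KVO to observe changes to the item's status

- HTTP live streams

NSURL *url = [NSURL URLWithString:@"<#Live stream URL#>"];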

// You may find a test stream at <http://devimages.apple.com/iphone/samples/bipbop/bipbopall.m3u8>.

self.playerItem = [AVPlayerItem playerItemWithURL:url];

[playerItem addObserver:self forKeyPath:@"status" options:0 context:&ItemStatusContext];

self.player = [AVPlayer playerWithPlayerItem:playerItem];

2.3 Playing an Item

- (IBAction)play:sender {

Changing the playback rate

- canPlayReverse: whether the item supports reverse playback (a rate of -1.0)

- canPlaySlowReverse: rates between 0.0 and -1.0

- canPlayFastReverse: rates less than -1.0

aPlayer.rate = 0.5;

aPlayer.rate = 1; // normal playback

aPlayer.rate = 0; // paused

aPlayer.rate = -0.5; // reverse playback

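Repositioning the playhead: used to jump to a position in the video and to reset the playhead after playback finishes

- seekToTime: tuned for performance rather than precision

- seekToTime:toleranceBefore:toleranceAfter: for cases that need precision

CMTime fiveSecondsIn = CMTimeMake(5, 1);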

[player seekToTime:fiveSecondsIn];

CMTime fiveSecondsIn = CMTimeMake(5, 1);

[player seekToTime:fiveSecondsIn toleranceBefore:kCMTimeZero toleranceAfter:kCMTimeZero];

After playback finishes, move the playhead back to zero so that the item can be played again.

// Register with the notification center after creating the player item.

[[NSNotificationCenter defaultCenter]

addObserver:self

selector:@selector(playerItemDidReachEnd:)

name:AVPlayerItemDidPlayToEndTimeNotification

object:<#The player item#>];

- (void)playerItemDidReachEnd:(NSNotification *)notification {

[player seekToTime:kCMTimeZero];

}

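2.4. Playing Multiple Items

Use an AVQueuePlayer to play several items; after you call play, they play in order.

- advanceToNextItem: skips to the next item

- insertItem:afterItem: inserts an item

- removeItem: removes an item

- removeAllItems: removes all items

NSArray *items = <#An array of player items#>;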

AVQueuePlayer *queuePlayer = [[AVQueuePlayer alloc] initWithItems:items];

AVPlayerItem *anItem = <#Get a player item#>;

if ([queuePlayer canInsertItem:anItem afterItem:nil]) {

[queuePlayer insertItem:anItem afterItem:nil];

}

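2.5. Monitoring Playback

The state of a player and its item can change during playback. For example:

- If the user switches to another app, the player's rate drops to 0.0

- When playing remote media, the item's loadedTimeRanges and seekableTimeRanges change as more data becomes available

- The player's currentItem property changes when an item is created for an HTTP live stream

- The item's tracks property may change while an HTTP live stream is playing

- The player's or item's status property may change if playback fails for some reason

Register for and unregister from these change notifications on the main thread.

Responding to a status change

When the status of a player or an item changes, you are told through a key-value change notification.

- (void)observeValueForKeyPath:(NSString *)keyPath ofObject:(id)object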

change:(NSDictionary *)change context:(void *)context {

if (context == <#Player status context#>) {

AVPlayer *thePlayer = (AVPlayer *)object;

if ([thePlayer status] == AVPlayerStatusFailed) {

NSError *error = [<#The AVPlayer object#> error];

// Respond to error: for example, display an alert sheet.

return;

}

// Deal with other status change if appropriate.

}

// Deal with other change notifications if appropriate.

[super observeValueForKeyPath:keyPath ofObject:object

change:change context:context];

return;

}

- Tracking time

- addPeriodicTimeObserverForInterval:queue:usingBlock:

- addBoundaryTimeObserverForTimes:queue:usingBlock:

// Assume a property: @property (strong) id playerObserver;
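A minimal sketch of the periodic variant, assuming a hypothetical helper `AddHalfSecondObserver` and a half-second interval chosen only for illustration:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: calls onTick roughly every 0.5 s of playback time.
// Keep the returned token and pass it to removeTimeObserver: when done.
static id AddHalfSecondObserver(AVPlayer *player,
                                void (^onTick)(Float64 seconds)) {
    CMTime interval = CMTimeMakeWithSeconds(0.5, 600);
    return [player addPeriodicTimeObserverForInterval:interval
                                                queue:dispatch_get_main_queue()
                                           usingBlock:^(CMTime time) {
        onTick(CMTimeGetSeconds(time));
    }];
}
```

The player does not keep the observer alive on your behalf in a way you can rely on, so the token is typically stored in a strong property such as the `playerObserver` mentioned above.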

- Reaching the end of an item

Register for AVPlayerItemDidPlayToEndTimeNotification to be notified when playback finishes.

[[NSNotificationCenter defaultCenter] addObserver:<#The observer, typically self#>

selector:@selector(<#The selector name#>)

name:AVPlayerItemDidPlayToEndTimeNotification

object:<#A player item#>];

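2.6. Playing a Video File Using AVPlayerLayer

2.6.1. Steps

- Configure a view to use an AVPlayerLayer

- Create an AVPlayer

- Create an AVPlayerItem for the asset and use KVO to observe its status

- Prepare for playback

- Reset the playhead after playback finishes

2.6.2. The Player View

#import <UIKit/UIKit.h>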
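One common way to build such a view is to back a UIView subclass with an AVPlayerLayer. The sketch below assumes the class name `PlayerView` used in this section; the accessor bodies are an illustration, not necessarily the original listing.

```objc
#import <UIKit/UIKit.h>
#import <AVFoundation/AVFoundation.h>

@interface PlayerView : UIView
@property (nonatomic, strong) AVPlayer *player;
@end

@implementation PlayerView

// Make the view's backing layer an AVPlayerLayer instead of a CALayer.
+ (Class)layerClass {
    return [AVPlayerLayer class];
}

// Forward the player to the backing layer, which does the rendering.
- (AVPlayer *)player {
    return [(AVPlayerLayer *)self.layer player];
}

- (void)setPlayer:(AVPlayer *)player {
    [(AVPlayerLayer *)self.layer setPlayer:player];
}

@end
```

Because the layer is the view's own backing layer, it resizes with the view and needs no manual frame management.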

2.6.3. A Simple View Controller

@class PlayerView;

2.6.4. Creating the Asset

- (IBAction)loadAssetFromFile:sender {

2.6.5. Responding to a Status Change

- (void)observeValueForKeyPath:(NSString *)keyPath ofObject:(id)object

2.6.6. Playing the Item

- (IBAction)play:sender {

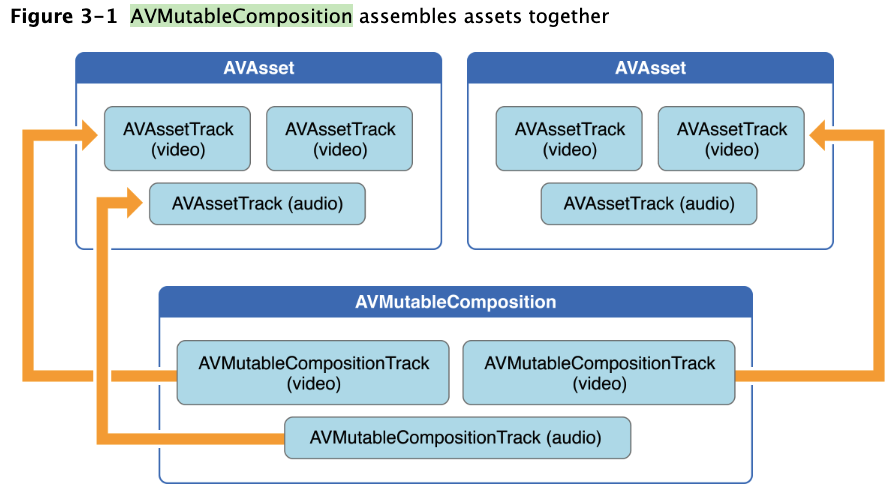

3.Editing

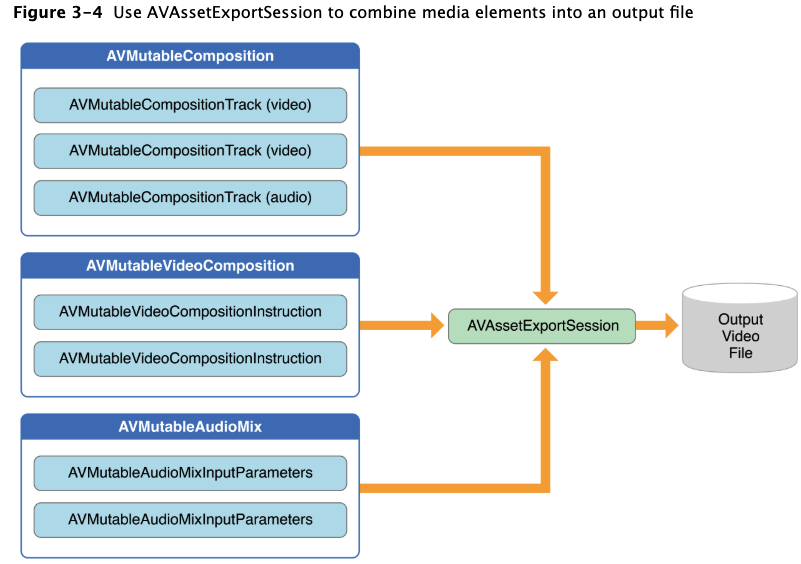

AVFoundation 提供了一组丰富功能的类去编辑asset, 编辑的核心是组合. 组合是来自一个或多个asset的集合.

AVMutableComposition类提供了插入,删除,管理tracks顺序的界面,下图展示了两个asset结合成一个新的asset.

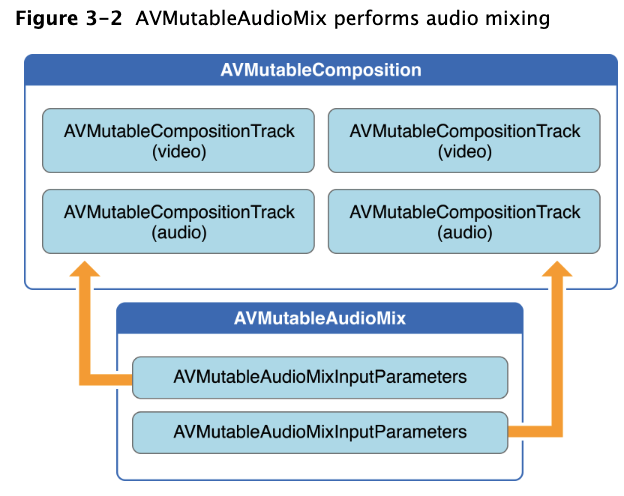

使用AVMutableAudioMix类,可以执行自定义的音频处理.

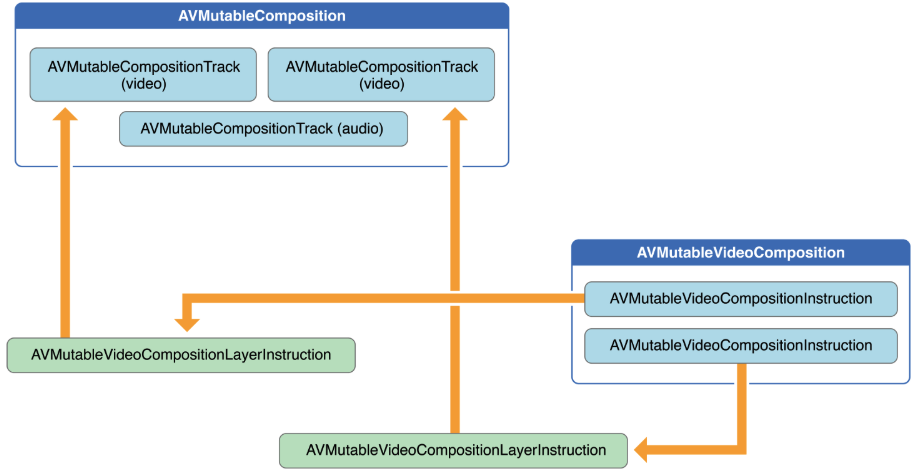

AVMutableVideoComposition: 使用合成的track进行编辑

AVMutableVideoCompositionLayerInstruction: 变换,渐变变换,不透明度,渐变不透明

AVAssetExportSession: 将音视频合成

3.1 新建Composition

使用AVMutableComposition创建对象,然后添加音视频数据,通过AVMutableCompositionTrack添加.1

2

3

4

5AVMutableComposition *mutableComposition = [AVMutableComposition composition];

// Create the video composition track.

AVMutableCompositionTrack *mutableCompositionVideoTrack = [mutableComposition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];

// Create the audio composition track.

AVMutableCompositionTrack *mutableCompositionAudioTrack = [mutableComposition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];

kCMPersistentTrackID_Invalid: a unique identifier is generated automatically and associated with the track.

Media types

- AVMediaTypeVideo

- AVMediaTypeAudio

- AVMediaTypeSubtitle

- AVMediaTypeText

3.2. Adding Audiovisual Data

// You can retrieve AVAssets from a number of places, like the camera roll for example.
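Adding media to a composition track comes down to inserting a time range of a source track at a given time. A minimal sketch, assuming a hypothetical helper `AppendFirstVideoTrack` that copies the whole first video track of a source asset:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: inserts the full first video track of `source`
// into `destination` at `atTime`. Returns NO if the source has no video
// track or if the insertion fails.
static BOOL AppendFirstVideoTrack(AVMutableCompositionTrack *destination,
                                  AVAsset *source,
                                  CMTime atTime,
                                  NSError **outError) {
    AVAssetTrack *track =
        [[source tracksWithMediaType:AVMediaTypeVideo] firstObject];
    if (track == nil) {
        return NO;
    }
    CMTimeRange wholeTrack =
        CMTimeRangeMake(kCMTimeZero, track.timeRange.duration);
    return [destination insertTimeRange:wholeTrack
                                ofTrack:track
                                 atTime:atTime
                                  error:outError];
}
```

To play two clips back to back, the second call would pass the duration of the first track as `atTime`.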

3.3. Generating a Volume Ramp

A single AVMutableAudioMix object can perform custom audio processing on each of the audio tracks in your composition individually.

AVMutableAudioMix *mutableAudioMix = [AVMutableAudioMix audioMix];

// Create the audio mix input parameters object.

AVMutableAudioMixInputParameters *mixParameters = [AVMutableAudioMixInputParameters audioMixInputParametersWithTrack:mutableCompositionAudioTrack];

// Set the volume ramp to slowly fade the audio out over the duration of the composition.

[mixParameters setVolumeRampFromStartVolume:1.f toEndVolume:0.f timeRange:CMTimeRangeMake(kCMTimeZero, mutableComposition.duration)];

// Attach the input parameters to the audio mix.

mutableAudioMix.inputParameters = @[mixParameters];

3.4 Custom Video Processing

An AVMutableVideoComposition object performs all the custom processing on the video tracks of your composition. With it, you can directly set the render size, scale, and frame rate for those tracks.

Changing the background color

AVMutableVideoCompositionInstruction *mutableVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

mutableVideoCompositionInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, mutableComposition.duration);

mutableVideoCompositionInstruction.backgroundColor = [[UIColor redColor] CGColor];

Applying an opacity ramp

AVAssetTrack *firstVideoAssetTrack = <#AVAssetTrack representing the first video segment played in the composition#>;

AVAssetTrack *secondVideoAssetTrack = <#AVAssetTrack representing the second video segment played in the composition#>;

// Create the first video composition instruction.

AVMutableVideoCompositionInstruction *firstVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

// Set its time range to span the duration of the first video track.

firstVideoCompositionInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, firstVideoAssetTrack.timeRange.duration);

// Create the layer instruction and associate it with the composition video track.

AVMutableVideoCompositionLayerInstruction *firstVideoLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:mutableCompositionVideoTrack];

// Create the opacity ramp to fade out the first video track over its entire duration.

[firstVideoLayerInstruction setOpacityRampFromStartOpacity:1.f toEndOpacity:0.f timeRange:CMTimeRangeMake(kCMTimeZero, firstVideoAssetTrack.timeRange.duration)];

// Create the second video composition instruction so that the second video track isn't transparent.

AVMutableVideoCompositionInstruction *secondVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

// Set its time range to span the duration of the second video track.

secondVideoCompositionInstruction.timeRange = CMTimeRangeMake(firstVideoAssetTrack.timeRange.duration, secondVideoAssetTrack.timeRange.duration);

// Create the second layer instruction and associate it with the composition video track.

AVMutableVideoCompositionLayerInstruction *secondVideoLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:mutableCompositionVideoTrack];

// Attach the first layer instruction to the first video composition instruction.

firstVideoCompositionInstruction.layerInstructions = @[firstVideoLayerInstruction];

// Attach the second layer instruction to the second video composition instruction.

secondVideoCompositionInstruction.layerInstructions = @[secondVideoLayerInstruction];

// Attach both of the video composition instructions to the video composition.

AVMutableVideoComposition *mutableVideoComposition = [AVMutableVideoComposition videoComposition];

mutableVideoComposition.instructions = @[firstVideoCompositionInstruction, secondVideoCompositionInstruction];

Incorporating Core Animation Effects

A video composition can add the power of Core Animation to your composition through the animationTool property. Through this animation tool, you can accomplish tasks such as watermarking video and adding titles or animating overlays. Core Animation can be used in two different ways with video compositions: You can add a Core Animation layer as its own individual composition track, or you can render Core Animation effects (using a Core Animation layer) into the video frames in your composition directly. The following code displays the latter option by adding a watermark to the center of the video:

CALayer *watermarkLayer = <#CALayer representing your desired watermark image#>;

CALayer *parentLayer = [CALayer layer];

CALayer *videoLayer = [CALayer layer];

parentLayer.frame = CGRectMake(0, 0, mutableVideoComposition.renderSize.width, mutableVideoComposition.renderSize.height);

videoLayer.frame = CGRectMake(0, 0, mutableVideoComposition.renderSize.width, mutableVideoComposition.renderSize.height);

[parentLayer addSublayer:videoLayer];

watermarkLayer.position = CGPointMake(mutableVideoComposition.renderSize.width/2, mutableVideoComposition.renderSize.height/4);

[parentLayer addSublayer:watermarkLayer];

mutableVideoComposition.animationTool = [AVVideoCompositionCoreAnimationTool videoCompositionCoreAnimationToolWithPostProcessingAsVideoLayer:videoLayer inLayer:parentLayer];

3.5 Combining Multiple Assets and Saving the Result to the Camera Roll

3.5.1. Creating the Composition

Use AVMutableComposition to combine multiple assets.

AVMutableComposition *mutableComposition = [AVMutableComposition composition];

AVMutableCompositionTrack *videoCompositionTrack = [mutableComposition addMutableTrackWithMediaType:AVMediaTypeVideo preferredTrackID:kCMPersistentTrackID_Invalid];

AVMutableCompositionTrack *audioCompositionTrack = [mutableComposition addMutableTrackWithMediaType:AVMediaTypeAudio preferredTrackID:kCMPersistentTrackID_Invalid];

3.5.2. Adding the Assets

AVAssetTrack *firstVideoAssetTrack = [[firstVideoAsset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];

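3.5.3. Checking the Video Orientations

Once you have added the video and audio tracks to the composition, make sure the orientations of the video tracks are correct. By default, all video tracks are assumed to be in landscape mode. If a video was recorded in portrait mode, it will not be oriented properly when exported. Likewise, combining a portrait video with a landscape video causes the export to fail.

BOOL isFirstVideoPortrait = NO;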
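One common heuristic for the orientation check is to inspect the track's preferredTransform: a 90-degree rotation has zeros on the diagonal and plus or minus one off the diagonal. The helper name `IsPortraitTransform` is hypothetical.

```objc
#import <Foundation/Foundation.h>
#import <CoreGraphics/CoreGraphics.h>

// Hypothetical helper: YES if the transform is a pure quarter-turn
// rotation, which is what portrait recordings typically carry.
static BOOL IsPortraitTransform(CGAffineTransform t) {
    return t.a == 0 && t.d == 0 &&
           (t.b == 1.0 || t.b == -1.0) &&
           (t.c == 1.0 || t.c == -1.0);
}
```

It would be called as `IsPortraitTransform(firstVideoAssetTrack.preferredTransform)` for each track, and the results compared before the tracks are combined. The exact comparisons assume the transform was stored with exact 0 and 1 entries; a transform built from `CGAffineTransformMakeRotation(M_PI_2)` may need a small tolerance instead.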

3.5.4. Video Composition Instructions

Once you know the video segments have compatible orientations, you can apply the necessary instructions to each segment.

AVMutableVideoCompositionInstruction *firstVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

// Set the time range of the first instruction to span the duration of the first video track.

firstVideoCompositionInstruction.timeRange = CMTimeRangeMake(kCMTimeZero, firstVideoAssetTrack.timeRange.duration);

AVMutableVideoCompositionInstruction * secondVideoCompositionInstruction = [AVMutableVideoCompositionInstruction videoCompositionInstruction];

// Set the time range of the second instruction to span the duration of the second video track.

secondVideoCompositionInstruction.timeRange = CMTimeRangeMake(firstVideoAssetTrack.timeRange.duration, secondVideoAssetTrack.timeRange.duration);

AVMutableVideoCompositionLayerInstruction *firstVideoLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:videoCompositionTrack];

// Set the transform of the first layer instruction to the preferred transform of the first video track.

[firstVideoLayerInstruction setTransform:firstTransform atTime:kCMTimeZero];

AVMutableVideoCompositionLayerInstruction *secondVideoLayerInstruction = [AVMutableVideoCompositionLayerInstruction videoCompositionLayerInstructionWithAssetTrack:videoCompositionTrack];

// Set the transform of the second layer instruction to the preferred transform of the second video track.

[secondVideoLayerInstruction setTransform:secondTransform atTime:firstVideoAssetTrack.timeRange.duration];

firstVideoCompositionInstruction.layerInstructions = @[firstVideoLayerInstruction];

secondVideoCompositionInstruction.layerInstructions = @[secondVideoLayerInstruction];

AVMutableVideoComposition *mutableVideoComposition = [AVMutableVideoComposition videoComposition];

mutableVideoComposition.instructions = @[firstVideoCompositionInstruction, secondVideoCompositionInstruction];

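3.5.5. Setting the Render Size and Frame Rate

CGSize naturalSizeFirst, naturalSizeSecond;

3.5.6. Exporting the Composition

// Create a static date formatter so we only have to initialize it once.

4. Exporting AVAsset

Overview

To read and write an asset's media, use the export APIs provided by AVFoundation. The AVAssetExportSession class covers common export needs, such as changing the file format or trimming the length of an asset.

- AVAssetReader: use it when you want to operate on the contents of an asset, for example reading the audio track to draw a waveform

- AVAssetWriter: use it to produce an asset from media such as sample buffers or still images

The asset reader and writer classes are not intended for real-time processing, and an asset reader cannot read from an HTTP live stream. If you do use an asset writer with a real-time data source, set expectsMediaDataInRealTime to YES on the writer's inputs. Setting this property to YES for a non-real-time source results in files that are not interleaved properly.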

4.1. Reading an Asset

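Each AVAssetReader object can be associated with only one asset, but that asset may contain multiple tracks.

Creating the Asset Reader

NSError *outError;

AVAsset *someAsset = <#AVAsset that you want to read#>;

AVAssetReader *assetReader = [AVAssetReader assetReaderWithAsset:someAsset error:&outError];

BOOL success = (assetReader != nil);

Setting Up the Asset Reader Outputs

After you create the asset reader, set up at least one output to receive the media data being read. When you set up outputs, make sure alwaysCopiesSampleData is set to NO to benefit from the performance improvement.

If you only want to read media data from one or more tracks and possibly convert it to a different format, use the AVAssetReaderTrackOutput class, with one track output object for each AVAssetTrack that you want to read from the asset.

AVAsset *localAsset = assetReader.asset;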
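A track output that decompresses audio to Linear PCM could be set up roughly as follows. `AddPCMAudioOutput` is a hypothetical helper name, and picking the first audio track is an assumption made for brevity.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: attaches a Linear PCM track output for the first
// audio track of the reader's asset. Returns nil if there is no audio
// track or the output cannot be added.
static AVAssetReaderTrackOutput *AddPCMAudioOutput(AVAssetReader *reader) {
    AVAssetTrack *audioTrack =
        [[reader.asset tracksWithMediaType:AVMediaTypeAudio] firstObject];
    if (audioTrack == nil) {
        return nil;
    }
    // Ask the reader to decompress the audio to Linear PCM.
    NSDictionary *settings = @{ AVFormatIDKey : @(kAudioFormatLinearPCM) };
    AVAssetReaderTrackOutput *output =
        [AVAssetReaderTrackOutput assetReaderTrackOutputWithTrack:audioTrack
                                                   outputSettings:settings];
    // Avoid an extra copy of every sample buffer, as recommended above.
    output.alwaysCopiesSampleData = NO;
    if (![reader canAddOutput:output]) {
        return nil;
    }
    [reader addOutput:output];
    return output;
}
```

Passing `nil` for the output settings instead would return the samples in their stored format.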

Use the AVAssetReaderAudioMixOutput and AVAssetReaderVideoCompositionOutput classes to read media data that has been mixed or composited with an AVAudioMix or AVVideoComposition object, respectively. These outputs are typically used when the asset reader reads from an AVComposition.

AVAudioMix *audioMix = <#An AVAudioMix that specifies how the audio tracks from the AVAsset are mixed#>;

The same applies to video:

AVVideoComposition *videoComposition = <#An AVVideoComposition that specifies how the video tracks from the AVAsset are composited#>;

// Assumes assetReader was initialized with an AVComposition.

AVComposition *composition = (AVComposition *)assetReader.asset;

// Get the video tracks to read.

NSArray *videoTracks = [composition tracksWithMediaType:AVMediaTypeVideo];

// Decompression settings for ARGB.

NSDictionary *decompressionVideoSettings = @{ (id)kCVPixelBufferPixelFormatTypeKey : [NSNumber numberWithUnsignedInt:kCVPixelFormatType_32ARGB], (id)kCVPixelBufferIOSurfacePropertiesKey : [NSDictionary dictionary] };

// Create the video composition output with the video tracks and decompression settings.

AVAssetReaderOutput *videoCompositionOutput = [AVAssetReaderVideoCompositionOutput assetReaderVideoCompositionOutputWithVideoTracks:videoTracks videoSettings:decompressionVideoSettings];

// Associate the video composition used to composite the video tracks being read with the output.

videoCompositionOutput.videoComposition = videoComposition;

// Add the output to the reader if possible.

if ([assetReader canAddOutput:videoCompositionOutput])

[assetReader addOutput:videoCompositionOutput];

- Reading the Asset’s Media Data

After setting up the outputs, call startReading to begin reading. Then retrieve the media data from each output individually with copyNextSampleBuffer.

// Start the asset reader up.
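The read loop can be sketched as follows. `CountSamples` is a hypothetical helper that only counts the buffers; real code would process each buffer before releasing it.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: drains one output and returns the number of
// sample buffers read, or -1 if reading could not start or failed.
static NSInteger CountSamples(AVAssetReader *reader,
                              AVAssetReaderOutput *output) {
    if (![reader startReading]) {
        return -1;
    }
    NSInteger count = 0;
    while (YES) {
        // Returns NULL when the output is exhausted or an error occurred.
        CMSampleBufferRef sampleBuffer = [output copyNextSampleBuffer];
        if (sampleBuffer == NULL) {
            break;
        }
        count += 1;                  // Do something with the buffer here.
        CFRelease(sampleBuffer);     // "copy" means the caller owns it.
    }
    // A NULL buffer alone does not distinguish "finished" from "failed".
    return (reader.status == AVAssetReaderStatusCompleted) ? count : -1;
}
```

Checking `reader.status` after the loop matters because `copyNextSampleBuffer` returns NULL both at the end of the data and on failure; `reader.error` holds the details in the failure case.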

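4.2. Writing an Asset

AVAssetWriter writes media data from multiple sources into a single file of a specified format. You don't need to associate an asset writer with a specific asset, but you must use a separate asset writer for each output file you create.

- Creating the Asset Writer

Specify the URL and the type of the output file.

NSError *outError;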

NSURL *outputURL = <#NSURL object representing the URL where you want to save the video#>;

AVAssetWriter *assetWriter = [AVAssetWriter assetWriterWithURL:outputURL

fileType:AVFileTypeQuickTimeMovie

error:&outError];

BOOL success = (assetWriter != nil);

- Setting Up the Asset Writer Inputs

For an asset writer to be able to write media data, you must set up at least one asset writer input.

// Configure the channel layout as stereo.

AudioChannelLayout stereoChannelLayout = {

.mChannelLayoutTag = kAudioChannelLayoutTag_Stereo,

.mChannelBitmap = 0,

.mNumberChannelDescriptions = 0

};

// Convert the channel layout object to an NSData object.

NSData *channelLayoutAsData = [NSData dataWithBytes:&stereoChannelLayout length:offsetof(AudioChannelLayout, mChannelDescriptions)];

// Get the compression settings for 128 kbps AAC.

NSDictionary *compressionAudioSettings = @{

AVFormatIDKey : [NSNumber numberWithUnsignedInt:kAudioFormatMPEG4AAC],

AVEncoderBitRateKey : [NSNumber numberWithInteger:128000],

AVSampleRateKey : [NSNumber numberWithInteger:44100],

AVChannelLayoutKey : channelLayoutAsData,

AVNumberOfChannelsKey : [NSNumber numberWithUnsignedInteger:2]

};

// Create the asset writer input with the compression settings and specify the media type as audio.

AVAssetWriterInput *assetWriterInput = [AVAssetWriterInput assetWriterInputWithMediaType:AVMediaTypeAudio outputSettings:compressionAudioSettings];

// Add the input to the writer if possible.

if ([assetWriter canAddInput:assetWriterInput])

[assetWriter addInput:assetWriterInput];

AVAsset *videoAsset = <#AVAsset with at least one video track#>;

AVAssetTrack *videoAssetTrack = [[videoAsset tracksWithMediaType:AVMediaTypeVideo] objectAtIndex:0];

assetWriterInput.transform = videoAssetTrack.preferredTransform;

NSDictionary *pixelBufferAttributes = @{

kCVPixelBufferCGImageCompatibilityKey : [NSNumber numberWithBool:YES],

kCVPixelBufferCGBitmapContextCompatibilityKey : [NSNumber numberWithBool:YES],

kCVPixelBufferPixelFormatTypeKey : [NSNumber numberWithInt:kCVPixelFormatType_32ARGB]

};

AVAssetWriterInputPixelBufferAdaptor *inputPixelBufferAdaptor = [AVAssetWriterInputPixelBufferAdaptor assetWriterInputPixelBufferAdaptorWithAssetWriterInput:self.assetWriterInput sourcePixelBufferAttributes:pixelBufferAttributes];

- Writing Media Data

CMTime halfAssetDuration = CMTimeMultiplyByFloat64(self.asset.duration, 0.5);

[self.assetWriter startSessionAtSourceTime:halfAssetDuration];

//Implementation continues.

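Call endSessionAtSourceTime: to end the current writing session. If the session runs right up to the end of the file, you can simply finish writing instead (finishWriting in the original API, since replaced by finishWritingWithCompletionHandler:).

// Prepare the asset writer for writing.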
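Once the writer has started and a session is open, media is usually supplied through a pull model: the input calls back whenever it can accept more data. A minimal sketch, in which `PumpSamples` and both block parameters are hypothetical names:

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: feeds an input until the source runs dry.
// Assumes startWriting and startSessionAtSourceTime: were already
// called on the owning AVAssetWriter.
static void PumpSamples(AVAssetWriterInput *input,
                        dispatch_queue_t queue,
                        CMSampleBufferRef (^copyNextSample)(void),
                        void (^finished)(void)) {
    [input requestMediaDataWhenReadyOnQueue:queue usingBlock:^{
        // Append only while the input can take more, then return and
        // wait for the next callback.
        while (input.isReadyForMoreMediaData) {
            CMSampleBufferRef sample = copyNextSample();
            if (sample == NULL) {
                [input markAsFinished];   // no more data for this input
                finished();
                break;
            }
            BOOL appended = [input appendSampleBuffer:sample];
            CFRelease(sample);
            if (!appended) {
                [input markAsFinished];   // writer failed; stop feeding
                finished();
                break;
            }
        }
    }];
}
```

The `copyNextSample` block is expected to return a +1 retained buffer, for example the result of `copyNextSampleBuffer` on a reader output, or NULL when the source is exhausted.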

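4.3. Reencoding Assets

NSString *serializationQueueDescription = [NSString stringWithFormat:@"%@ serialization queue", self];

4.4 Summary: Using an Asset Reader and Writer in Tandem to Reencode an Asset

Workflow

- Use serialization queues to handle the asynchronous reading and writing of data

- Initialize an asset reader and configure two asset reader outputs (one for video, one for audio)

- Initialize an asset writer and configure two asset writer inputs (one for video, one for audio)

- Use the asset reader to supply media data to the asset writer through the two output/input pairs

- Use a dispatch group to be notified when reencoding completes

- Allow the user to cancel reencoding once it has begun

4.4.1. Handling the Initial Setup

Create three separate serialization queues to coordinate reading and writing. The main queue coordinates starting and stopping the asset reader and writer; the other two serialize the reading and writing for each output/input pair.

NSString *serializationQueueDescription = [NSString stringWithFormat:@"%@ serialization queue", self];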

// Create the main serialization queue.

self.mainSerializationQueue = dispatch_queue_create([serializationQueueDescription UTF8String], NULL);

NSString *rwAudioSerializationQueueDescription = [NSString stringWithFormat:@"%@ rw audio serialization queue", self];

// Create the serialization queue to use for reading and writing the audio data.

self.rwAudioSerializationQueue = dispatch_queue_create([rwAudioSerializationQueueDescription UTF8String], NULL);

NSString *rwVideoSerializationQueueDescription = [NSString stringWithFormat:@"%@ rw video serialization queue", self];

// Create the serialization queue to use for reading and writing the video data.

self.rwVideoSerializationQueue = dispatch_queue_create([rwVideoSerializationQueueDescription UTF8String], NULL);

self.asset = <#AVAsset that you want to reencode#>;

4.4.2. Initializing the Asset Reader and Writer

- (BOOL)setupAssetReaderAndAssetWriter:(NSError **)outError

4.4.3. Reencoding the Asset

- (BOOL)startAssetReaderAndWriter:(NSError **)outError

4.4.4. Handling Completion

- (void)readingAndWritingDidFinishSuccessfully:(BOOL)success withError:(NSError *)error

4.4.5. Handling Cancellation

- (void)cancel

4.5. Asset Output Settings Assistant

The AVOutputSettingsAssistant class aids in creating output-settings dictionaries for an asset reader or writer. This makes setup much simpler, especially for high frame rate H264 movies that have a number of specific presets.

AVOutputSettingsAssistant *outputSettingsAssistant = [AVOutputSettingsAssistant outputSettingsAssistantWithPreset:<some preset>];
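As a rough illustration of what the assistant hands back, the sketch below asks for the suggested settings of one preset. The helper name `Suggested720pVideoSettings` and the choice of the 1280x720 preset are assumptions for the example.

```objc
#import <AVFoundation/AVFoundation.h>

// Hypothetical helper: returns the suggested video output settings for
// the 1280x720 preset and, optionally, the matching file type.
static NSDictionary<NSString *, id> *Suggested720pVideoSettings(AVFileType *outFileType) {
    AVOutputSettingsAssistant *assistant =
        [AVOutputSettingsAssistant outputSettingsAssistantWithPreset:AVOutputSettingsPreset1280x720];
    if (assistant == nil) {
        return nil;   // preset not available on this system
    }
    if (outFileType != NULL) {
        *outFileType = assistant.outputFileType;
    }
    // Suitable as outputSettings for a video AVAssetWriterInput.
    return assistant.videoSettings;
}
```

The assistant can refine its suggestions if it is told about the source first, for example through its sourceVideoFormat and sourceAudioFormat properties.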

Other

// Create a UIImage from sample buffer data